PointGuard AI Unveils AI Discovery and Threat Correlation Across Source Code, AI Pipelines, and AI Resources

Solution connects threats across source code and AI tools to eliminate blind spots and govern AI applications from end to end

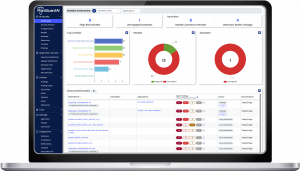

LAS VEGAS, NV, UNITED STATES, August 5, 2025 /EINPresswire.com/ -- PointGuard AI, a leader in AI security and governance, today announced an expansion of its platform at Black Hat USA 2025. In an industry first, PointGuard provides AI discovery, threat correlation, and protection across the full AI stack, including source code repositories such as GitHub, MLOps pipelines, and critical AI resources such as models, datasets, notebooks, and agents.

With this unmatched visibility, security teams can uncover hidden AI projects and dangerous assets within software development and MLOps environments — and connect the dots across silos to detect new types of threats to AI systems, mitigate risks, and enforce end-to-end governance.

“Our goal is to enable AI innovation, not hinder it. But that requires end-to-end security and governance across the AI lifecycle,” said Pravin Kothari, Founder and CEO of PointGuard AI. “By extending our powerful discovery and correlation engine to code repositories, we are exposing and securing AI tools before they become embedded in enterprise applications.”

The platform now scans GitHub and other source code repositories to identify AI-related components, including models, datasets, notebooks, API calls, and libraries, along with their connections to external applications or data sources. This enhanced discovery is tightly integrated with AI security posture management, red teaming, runtime guardrails, policy enforcement, and AI governance, enabling organizations to correlate risks across AI pipelines. Use cases include identifying code that contains illicit AI prompts, detecting prompt injections that might exploit code vulnerabilities, and finding misconfigured agents that can leak sensitive data.

A recent IBM survey found that 13% of organizations have already experienced breaches involving AI models — with 97% of those lacking even basic access controls. In real-world incidents, prompt injections have tricked AI agents into executing destructive tasks such as deleting infrastructure. These risks are amplified when AI tools remain undiscovered and ungoverned.

PointGuard provides discovery and protection for AI pipelines across platforms like Databricks, Azure, AWS, and GCP. With the addition of source code scanning and multi-channel threat correlation, PointGuard now covers the entire AI lifecycle — delivering visibility, control, and compliance from development to deployment.

About PointGuard AI

PointGuard AI provides the industry’s most comprehensive platform for discovering, securing, and governing AI applications, models, agents, and assets. Trusted by enterprises across finance, healthcare, and critical infrastructure, PointGuard helps organizations embrace AI innovation with confidence, visibility, and control.

Willy Leichter

PointGuard AI

Legal Disclaimer:

EIN Presswire provides this news content “as is” without warranty of any kind. We do not accept any responsibility or liability for the accuracy, content, images, videos, licenses, completeness, legality, or reliability of the information contained in this article. If you have any complaints or copyright issues related to this article, kindly contact the author above.

![]()